I will be the one to say it. AI disappointed most of us in 2024. We started the year with chatbots that were good enough to be entertaining but not good enough to replace a human when accuracy or creativity was important, and we ended the year with… well, the same thing. The conversations are a little more entertaining, but the world has not been changed.

In 2023 and 2024 we were so enthralled by the first couple of leaps of Generative AI that it seemed likely that getting rid of hallucinations and creating reasoning through the use of agents and multi-step thinking (like OpenAI o1) would very soon propel us to another giant leap. Instead, the growth has been much more incremental.

I still believe that AI is going to change the world. Expecting too much too soon is a common problem in technology. I’m very much reminded of Bill Gates’ quote, “Most people overestimate what they can do in one year and underestimate what they can do in ten years.” This is where the first part of my key to AI Investment comes in: patience.

Patience will be required in two ways.

First, there’s the obvious: don’t give up on AI. The underlying technologies are expanding at an unbelievable rate. AI’s ability to reason jumped forward in 2024 (I posted about OpenAI o1), Agentic AI showed potential, an AI effectively won the Nobel Prize in Chemistry, and the legal frameworks for AI are starting to come together. There may be more work required to make AI practical than we thought, but that work is being done.

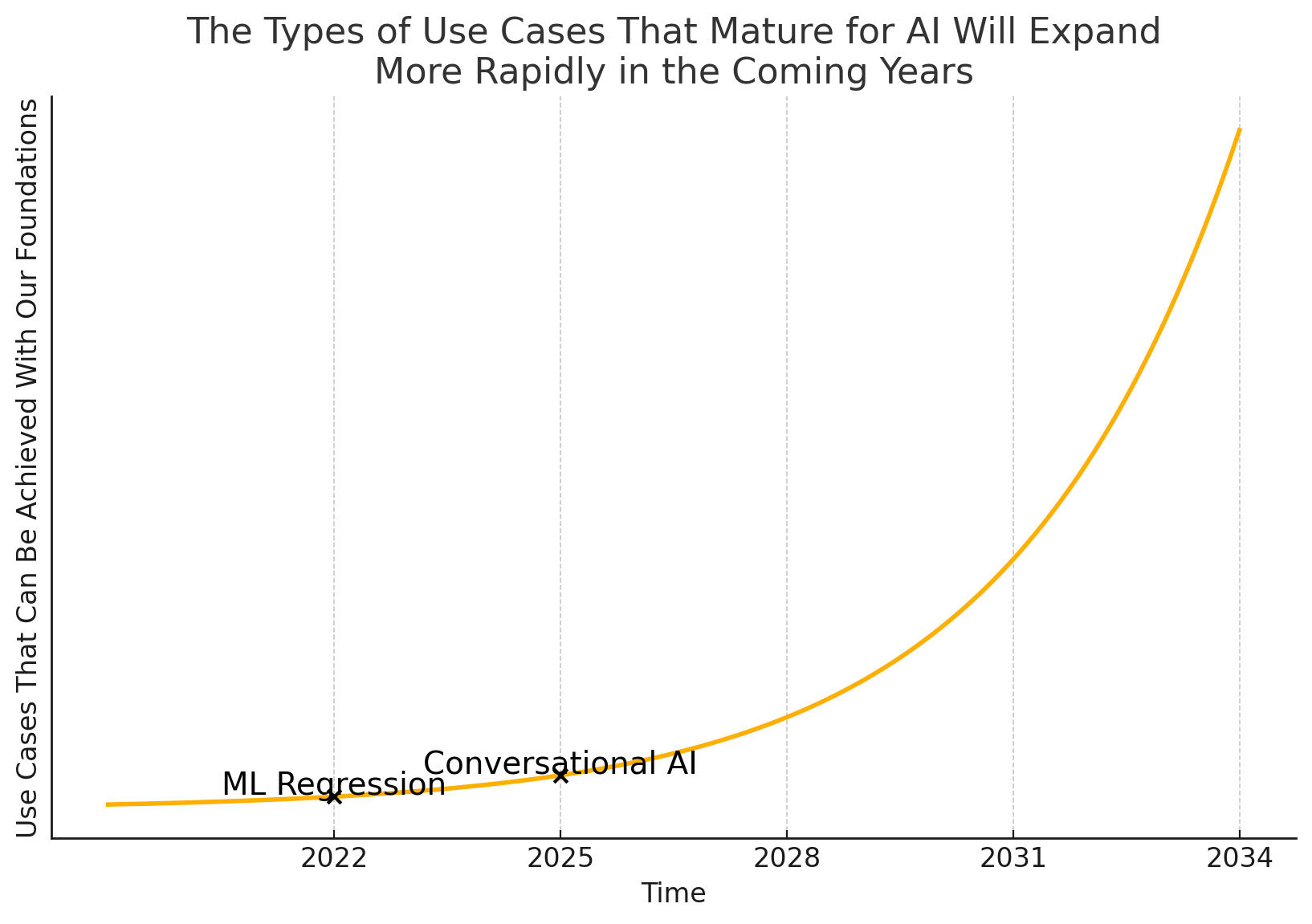

The second way that patience is required is a little bit less obvious. You need to exercise patience in not throwing good money after bad on use cases that are impossible today. One thing that has become clear about AI is that it takes an ecosystem to achieve a use case. Unless you’re NVIDIA or Google, you’re not creating your own LLMs, building your own GPUs, writing your own vector algorithms, etc. You have to use what you can buy. I consider all of these things to be your “AI Foundations.” No matter how hard you try as a company, some use cases are just not possible with the current set of AI Foundations.

Think of it using this chart:

There are some use cases that are just above the yellow line and not practical to consider today. This is particularly true for use cases for which there isn’t “partial” value. Think about one of the most talked-about use cases… replacing your software developers with AI Agents. It’s just not feasible yet with the foundations that we have. The LLMs are not creative enough, the understanding of requirements is not deep enough, and the reasoning is not up to the level of critical thinking. Additionally, this problem doesn’t have interim value milestones as currently framed. Either the AI can perform as a software developer or it can’t. It doesn’t make my application better if it submits code that is only 40% right and fails all the tests.

Patience is necessary to avoid a mistake I’ve seen companies make: they pour more and more resources into these impossible use cases because they see the AI agent go from 40% right to 43% right. They make these incremental gains with painstaking analysis of prompts and patches like adversarial AI. The agent will never be close enough to 100% to be used without a fundamental shift in the AI Foundations that we’re building on. Unfortunately, when that shift happens, all the work you did on this set of foundations may or may not be applicable. For example, a lot of the prompts written to make GPT-4 try to reason don’t make any sense when they’re fed to OpenAI o1… so people are just throwing them away.

I know what most of you are thinking… my two recommendations seem to contradict each other. On one hand, I’m saying that AI will be important and you need to continue to invest in it. On the other hand, I’m saying that you should stop investing in many of the AI use cases you think would be most valuable because they’re infeasible. The trick to deploying your 2025 AI investments will be covered in “The Key to AI Investment in 2025 Part II: Preparation.”